Dingfeng · Product Display

Service hotline:

13392518015

01

Our Strengths

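Strong technical capability, standardized business practices, and a rigorous work ethic.

The company offers a wide range of product types, and each department has clearly defined responsibilities, which allows for a more rational product structure design.

The company upholds the philosophy of "honesty and pragmatism" and an enterprise spirit of being led by technology, surviving on quality, and growing through integrity.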

02

Product Advantages

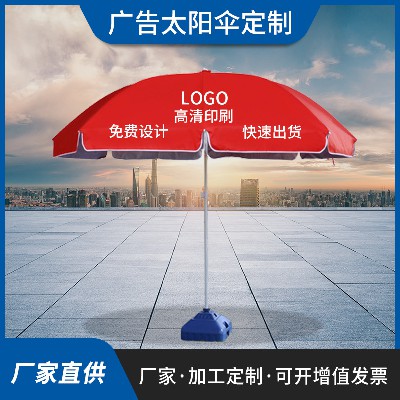

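Main business: custom manufacturing of rain-and-shine umbrellas, sun umbrellas, advertising tents, and similar products.

With many years of industry experience, we select materials strictly and focus on quality. Sturdy ribs resist strong wind more effectively, and the shafts are both lightweight and durable, giving you a noticeably better experience.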

03

Technical Advantages

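Our products are manufactured strictly to quality standards using proven technical processes, ensuring consistent performance and dependable quality.

The company rigorously tests every performance indicator of its umbrellas to ensure that the factory pass rate meets customer expectations.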

04

Service Advantages

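A meticulous after-sales team and an attentive service attitude make after-sales support truly worry-free.

To help you clearly identify your needs, we will work to resolve any problems you encounter, always putting the customer first.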

About Dingfeng

Main business: custom manufacturing of rain-and-shine umbrellas, sun umbrellas, advertising tents, and similar products.

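Heshan Longkou Dingfeng Rain Gear Factory (鹤山市龙口镇鼎丰雨具厂) specializes in producing and processing umbrellas, sun umbrellas, tents, and similar products, and operates a complete, scientific quality management system.

The factory's integrity, capability, and product quality are recognized across the industry. Friends from all walks of life are welcome to visit us for tours, advice, and business discussions.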

Hotline:

13392518015 (Mr. Li)

Dingfeng · News Center